
Data Science LAB

[Deep Learning] Batch Normalization (BN)


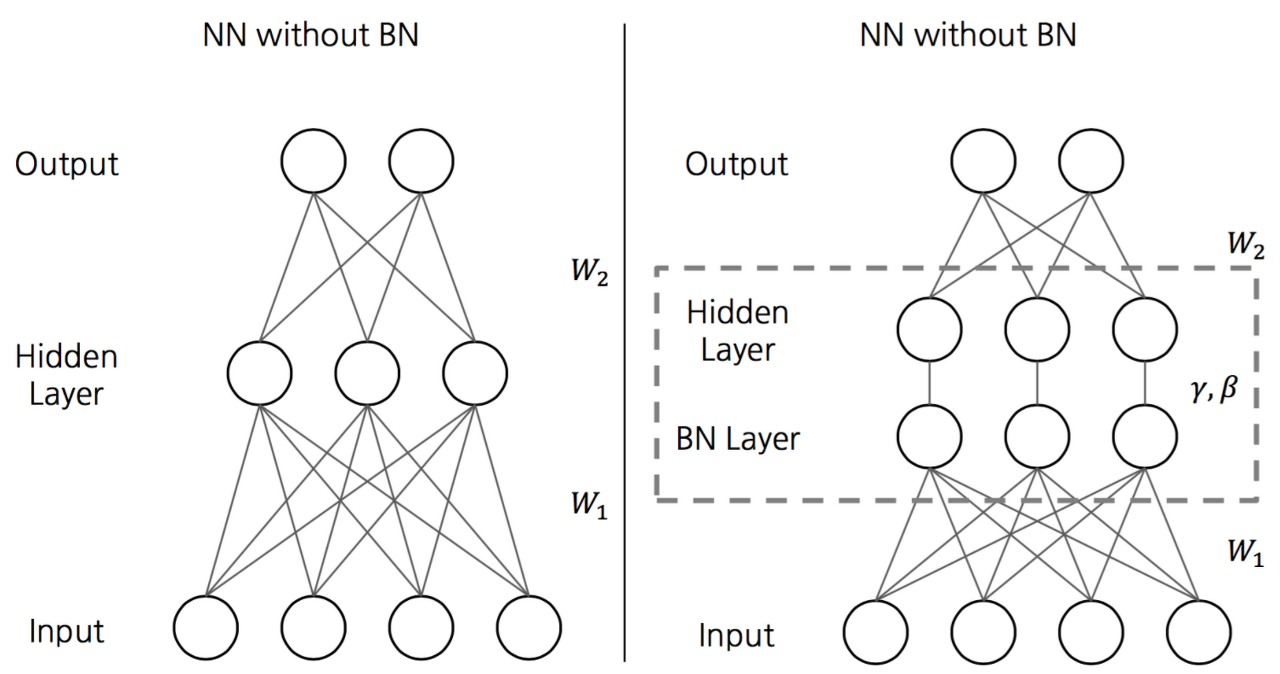

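Previously, the vanishing and exploding gradient problems were addressed by finding a new activation function or by changing how the weights are initialized

-> Neither guarantees that the problems will not come back during training ❌

In response, Sergey Ioffe and Christian Szegedy proposed the batch normalization technique in 2015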

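- Batch Normalization

- Batch: a neural network is usually trained on small chunks of the full dataset; a batch is one such chunk

- Batch Normalization: normalization performed per batch

- Adds one operation to the model just before or after the activation function of each layer

- This operation simply zero-centers and normalizes its input, then scales and shifts the result using two new parameters per layer

- If batch normalization is added as the first layer of the network, the training set does not need to be standardized beforehand

- During training, batch normalization normalizes its input, then scales and shifts it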
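The operation described in the list above can be written out for one mini-batch. The symbols are assumptions chosen for illustration, since the post names none of them: $m_B$ is the number of samples in the batch, $x^{(i)}$ is the input for sample $i$, $\gamma$ and $\beta$ are the two learned parameters (scale and shift), and $\varepsilon$ is a small constant that avoids division by zero.

$$
\begin{aligned}
\mu_B &= \frac{1}{m_B} \sum_{i=1}^{m_B} x^{(i)} \\
\sigma_B^2 &= \frac{1}{m_B} \sum_{i=1}^{m_B} \left( x^{(i)} - \mu_B \right)^2 \\
\hat{x}^{(i)} &= \frac{x^{(i)} - \mu_B}{\sqrt{\sigma_B^2 + \varepsilon}} \\
z^{(i)} &= \gamma \odot \hat{x}^{(i)} + \beta
\end{aligned}
$$

All operations are applied per feature, so $\mu_B$, $\sigma_B^2$, $\gamma$ and $\beta$ are vectors with one entry per input feature.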

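In other words, zero-centering and normalizing the input requires estimates of its mean and standard deviation, which batch normalization computes over the current mini-batch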
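As a rough illustration of the training-time computation, here is a minimal NumPy sketch. The function name `batch_norm_forward`, the `eps` value, and the synthetic data are assumptions made for this example and are not taken from the post or from Keras internals.

```python
import numpy as np


def batch_norm_forward(x, gamma, beta, eps=1e-5):
    """Batch-normalize a mini-batch x of shape (batch_size, n_features).

    gamma and beta are the two per-feature parameters (scale and shift).
    eps is a small assumed constant that avoids division by zero.
    """
    mu = x.mean(axis=0)                     # per-feature mean over the batch
    var = x.var(axis=0)                     # per-feature variance over the batch
    x_hat = (x - mu) / np.sqrt(var + eps)   # zero-center and normalize
    return gamma * x_hat + beta             # rescale and shift


# Hypothetical unstandardized inputs: 32 samples, 4 features.
rng = np.random.default_rng(0)
batch = rng.normal(loc=50.0, scale=10.0, size=(32, 4))

gamma = np.ones(4)   # initial scale: leave the normalized values as they are
beta = np.zeros(4)   # initial shift: none

out = batch_norm_forward(batch, gamma, beta)
print(out.mean(axis=0))  # per-feature means, very close to 0
print(out.std(axis=0))   # per-feature standard deviations, very close to 1
```

With `gamma` at one and `beta` at zero the output is simply the standardized batch, which is why a batch normalization layer placed first can stand in for standardizing the training set. During training both parameters are learned, so the network can move away from zero mean and unit variance if that helps.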

Advantages of batch normalization

1. Training can be faster

2. Reduces sensitivity to weight initialization (gives more freedom in choosing hyperparameters)

3. Acts as a regularizer, which helps the model generalize

Batch normalization example implemented with Keras

```python
from tensorflow import keras

# Batch normalization is placed first (on the raw inputs) and then
# before the activation function of each hidden layer.
model = keras.Sequential([
    keras.layers.Flatten(input_shape=[28, 28]),
    keras.layers.BatchNormalization(),
    # The hidden Dense layers are created without a bias term.
    keras.layers.Dense(300, kernel_initializer='he_normal', use_bias=False),
    keras.layers.BatchNormalization(),
    keras.layers.Activation('relu'),
    keras.layers.Dense(100, kernel_initializer='he_normal', use_bias=False),
    keras.layers.BatchNormalization(),
    keras.layers.Activation('relu'),
    keras.layers.Dense(10, activation='softmax')
])
```
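The hidden `Dense` layers in the example are created with `use_bias=False`. The post does not say why; presumably it is because batch normalization subtracts the batch mean and has its own shift parameter, so a bias added just before it has no effect. The NumPy sketch below checks this under simplifying assumptions: the helper `batch_norm`, the random data, and the `eps` value are hypothetical, and the scale and shift are left at one and zero.

```python
import numpy as np


def batch_norm(z, eps=1e-5):
    """Zero-center and normalize each column of z over the batch axis.

    Scale and shift are fixed at 1 and 0 to keep the sketch short.
    """
    mu = z.mean(axis=0)
    var = z.var(axis=0)
    return (z - mu) / np.sqrt(var + eps)


rng = np.random.default_rng(42)
x = rng.normal(size=(64, 8))   # hypothetical mini-batch: 64 samples, 8 features
W = rng.normal(size=(8, 3))    # hypothetical Dense kernel
b = rng.normal(size=3)         # hypothetical Dense bias

without_bias = batch_norm(x @ W)
with_bias = batch_norm(x @ W + b)

# The bias shifts every sample by the same amount, so it is removed
# again when the batch mean is subtracted.
bias_is_redundant = np.allclose(without_bias, with_bias)
print(bias_is_redundant)
```

Dropping the bias therefore saves one parameter per unit without changing what the layer can represent, as long as a batch normalization layer follows it directly.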
