Data Science LAB

[Deep Learning] Comparing Optimization Methods (SGD, Momentum, AdaGrad, Adam)


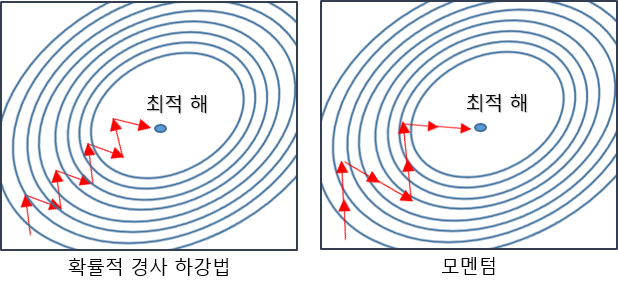

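1. SGD (Stochastic Gradient Descent)

- Computes the gradient of the loss with respect to each parameter and moves the parameter a small step against that gradient (the downhill direction), repeating this many times to approach the optimal values

- Unlike full-batch gradient descent, each update adjusts the weights using only a randomly sampled subset of the data

Drawback of SGD

: On anisotropic functions (functions whose slope differs depending on the direction), the search path is inefficient, because the gradient generally does not point straight at the minimum

- Python implementation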

```python
class SGD:
    def __init__(self, lr=0.01):
        self.lr = lr  # learning rate

    def update(self, params, grads):
        # params and grads are dicts that share the same keys
        for key in params.keys():
            params[key] -= self.lr * grads[key]
```
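As a rough illustration of "using only a randomly sampled subset of the data", the sketch below fits a single slope with mini-batch SGD. The toy data (`y = 3x`), the batch size, the learning rate, and the helper name `fit_slope` are all assumptions made for this example and do not come from the post.

```python
import numpy as np


class SGD:
    """Plain SGD: params <- params - lr * grads."""

    def __init__(self, lr=0.01):
        self.lr = lr

    def update(self, params, grads):
        for key in params.keys():
            params[key] -= self.lr * grads[key]


def fit_slope(n_steps=500, batch_size=16, seed=0):
    """Fit y = w * x with mini-batch SGD on toy data (hypothetical setup)."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.0, 1.0, size=200)
    y = 3.0 * x  # the true slope is 3.0
    params = {"w": np.zeros(1)}
    optimizer = SGD(lr=0.1)
    for _ in range(n_steps):
        # Randomly sample a subset of the data for this step.
        idx = rng.choice(x.size, size=batch_size, replace=False)
        xb, yb = x[idx], y[idx]
        # Gradient of the mean squared error with respect to w.
        grad_w = 2.0 * np.mean((params["w"] * xb - yb) * xb)
        optimizer.update(params, {"w": np.array([grad_w])})
    return float(params["w"][0])


w = fit_slope()
print(w)  # should end up very close to the true slope
```

Each step sees only 16 of the 200 points, so individual gradients are noisy, yet the estimate still drifts toward the true slope.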

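2. Momentum

- "Momentum" is the physical quantity of that name; the method borrows the physical law that an object pushed along a slope accelerates, so the parameters move like a ball rolling toward the bottom of a bowl

- Like gradient descent, it computes the gradient at every step, but it also refers to the previous direction of movement: a fixed fraction of the previous update is carried over, so consecutive updates with the same sign (+ or -) reinforce each other

- This reduces the zigzag pattern in which + and - directions alternate, and because a fixed fraction of the previous move goes into the next one, the update behaves as if it had inertia

- Python implementation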

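```python
import numpy as np


class Momentum:
    def __init__(self, lr=0.01, momentum=0.9):
        self.lr = lr
        self.momentum = momentum  # fraction of the previous velocity kept
        self.v = None             # velocity, created lazily on first update

    def update(self, params, grads):
        if self.v is None:
            # One velocity array per parameter, same shape as the parameter.
            self.v = {}
            for key, val in params.items():
                self.v[key] = np.zeros_like(val)

        for key in params.keys():
            self.v[key] = self.momentum * self.v[key] - self.lr * grads[key]
            params[key] += self.v[key]
```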
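The inertia and zigzag-damping effects described above can be checked on the velocity rule alone. The sketch below is only an illustration: the two gradient sequences (constant, and flipping sign every step) and the helper `final_velocity` are assumptions, not part of the post.

```python
def final_velocity(grads, lr=0.01, momentum=0.9):
    """Apply v <- momentum * v - lr * g over a gradient sequence; return the last v."""
    v = 0.0
    for g in grads:
        v = momentum * v - lr * g
    return v


n_steps = 200
# Gradient keeps pointing the same way: velocity builds up (inertia).
v_steady = final_velocity([1.0] * n_steps)
# Gradient flips sign every step: successive contributions partly cancel.
v_zigzag = final_velocity([(-1.0) ** i for i in range(n_steps)])

sgd_step = 0.01 * 1.0  # size of a plain SGD step for the same lr and |g| = 1
print(abs(v_steady) / sgd_step)  # approaches 1 / (1 - momentum)
print(abs(v_zigzag) / sgd_step)  # approaches 1 / (1 + momentum)
```

With `momentum=0.9`, a consistent gradient ends up moving the parameter about ten times as far per step as plain SGD, while a sign-flipping gradient moves it about half as far.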

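3. AdaGrad

- Training proceeds while the learning rate is adapted individually for each parameter

- Adds to gradient descent the option of adjusting each variable's learning rate according to how much that variable has been updated so far (measured by the running sum of its squared gradients)

- Variables that have changed little get a larger learning rate, and variables that have changed a lot get a smaller one (a variable that has changed a lot is assumed to be close to its optimum, so it moves in small steps for fine adjustment; conversely, a variable that has changed little keeps a larger learning rate so that the loss drops quickly)

- Python implementation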

```python
import numpy as np


class AdaGrad:
    def __init__(self, lr=0.01):
        self.lr = lr
        self.h = None  # running sum of squared gradients, per parameter

    def update(self, params, grads):
        if self.h is None:
            self.h = {}
            for key, val in params.items():
                self.h[key] = np.zeros_like(val)

        for key in params.keys():
            self.h[key] += grads[key] * grads[key]
            # 1e-7 avoids division by zero while h is still 0.
            params[key] -= self.lr * grads[key] / (np.sqrt(self.h[key]) + 1e-7)
```
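To make the per-parameter learning rate concrete, the sketch below accumulates `h` for two hypothetical parameters, one that always sees a gradient of 10 and one that always sees 0.1, and prints the factor `lr / (sqrt(h) + 1e-7)` that multiplies the gradient in the update. The gradient values and the number of steps are assumptions chosen for illustration.

```python
import numpy as np

lr = 0.01
# Two hypothetical parameters: one always sees large gradients, one small ones.
grads = {"large": np.array([10.0]), "small": np.array([0.1])}
h = {key: np.zeros_like(g) for key, g in grads.items()}

for _ in range(10):
    for key, g in grads.items():
        h[key] += g * g  # running sum of squared gradients

# Per-parameter learning rate that multiplies the gradient in the update.
effective_lr = {key: lr / (np.sqrt(h[key]) + 1e-7) for key in h}
for key, value in effective_lr.items():
    print(key, float(value[0]))
```

The parameter with small gradients keeps an effective learning rate roughly 100 times larger than the one with large gradients, and both keep shrinking as `h` grows.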

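4. Adam

- Combines the strengths of Momentum and AdaGrad (it keeps a momentum-like average of the gradients and scales each step by an average of the squared gradients)

- Applies bias correction to these averages, which start at zero; the correction depends on the hyperparameters beta1 and beta2

- Python implementation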

```python
import numpy as np


class Adam:
    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999):
        self.lr = lr
        self.beta1 = beta1  # decay rate of the first moment (mean of gradients)
        self.beta2 = beta2  # decay rate of the second moment (mean of squared gradients)
        self.iter = 0
        self.m = None
        self.v = None

    def update(self, params, grads):
        if self.m is None:
            self.m, self.v = {}, {}
            for key, val in params.items():
                self.m[key] = np.zeros_like(val)
                self.v[key] = np.zeros_like(val)

        self.iter += 1
        # Bias correction for both moments, folded into the step size.
        lr_t = self.lr * np.sqrt(1.0 - self.beta2**self.iter) / (1.0 - self.beta1**self.iter)

        for key in params.keys():
            self.m[key] += (1 - self.beta1) * (grads[key] - self.m[key])
            self.v[key] += (1 - self.beta2) * (grads[key] ** 2 - self.v[key])
            params[key] -= lr_t * self.m[key] / (np.sqrt(self.v[key]) + 1e-7)
```
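One way to see what the bias correction buys is to look at the very first update. The sketch below reproduces the step-1 arithmetic for a scalar gradient; the helper name `adam_first_step` and the sample gradient values are assumptions for illustration.

```python
import numpy as np


def adam_first_step(grad, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """Size of the very first Adam update for a scalar gradient."""
    m = (1 - beta1) * grad       # first moment after one step (starts at 0)
    v = (1 - beta2) * grad ** 2  # second moment after one step (starts at 0)
    # Bias correction folded into the step size, as the Adam class does with lr_t.
    lr_t = lr * np.sqrt(1.0 - beta2) / (1.0 - beta1)
    return lr_t * m / (np.sqrt(v) + eps)


for g in (100.0, 1.0, 0.01):
    print(g, adam_first_step(g))
```

Without the correction, `m` and `v` would be strongly biased toward zero in early iterations. With it, the first step comes out close to `lr` whether the gradient is 100 or 0.01, since the gradient's magnitude cancels between `m` and `sqrt(v)`.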

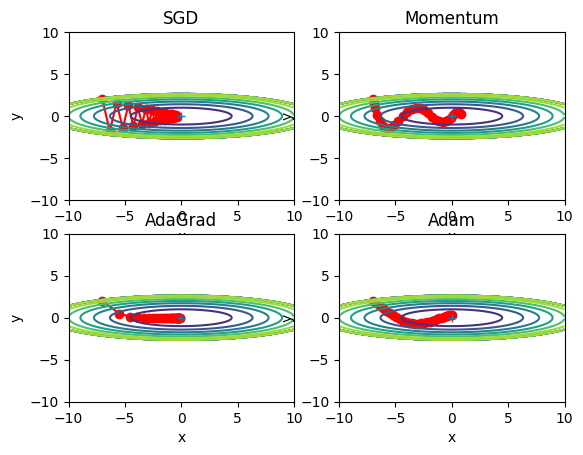

Comparison of the four optimization methods

The method should be chosen to suit the type of data
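A comparison of this kind can be approximated on a small anisotropic test function. The sketch below is an assumption-laden illustration: the function `f(x, y) = x**2 / 20 + y**2`, the start point `(-7, 2)`, the 30 steps, and the per-optimizer learning rates are all chosen for this example and are not taken from the figure.

```python
import numpy as np


def loss(p):
    return p[0] ** 2 / 20.0 + p[1] ** 2


def grad(p):
    return np.array([p[0] / 10.0, 2.0 * p[1]])


def run(step_fn, state, n_steps=30):
    """Apply one optimizer for n_steps from a fixed start; return the final point."""
    p = np.array([-7.0, 2.0])
    for _ in range(n_steps):
        p = step_fn(p, grad(p), state)
    return p


def sgd(p, g, s):
    return p - s["lr"] * g


def momentum(p, g, s):
    s["v"] = s["mu"] * s["v"] - s["lr"] * g
    return p + s["v"]


def adagrad(p, g, s):
    s["h"] = s["h"] + g * g
    return p - s["lr"] * g / (np.sqrt(s["h"]) + 1e-7)


def adam(p, g, s):
    s["t"] += 1
    s["m"] = s["m"] + (1 - s["b1"]) * (g - s["m"])
    s["v"] = s["v"] + (1 - s["b2"]) * (g ** 2 - s["v"])
    lr_t = s["lr"] * np.sqrt(1.0 - s["b2"] ** s["t"]) / (1.0 - s["b1"] ** s["t"])
    return p - lr_t * s["m"] / (np.sqrt(s["v"]) + 1e-7)


zeros = np.zeros(2)
# Hyperparameters below are illustrative assumptions, not tuned values.
optimizers = {
    "SGD": (sgd, {"lr": 0.95}),
    "Momentum": (momentum, {"lr": 0.1, "mu": 0.9, "v": zeros.copy()}),
    "AdaGrad": (adagrad, {"lr": 1.5, "h": zeros.copy()}),
    "Adam": (adam, {"lr": 0.3, "b1": 0.9, "b2": 0.999, "t": 0,
                    "m": zeros.copy(), "v": zeros.copy()}),
}

final_loss = {}
for name, (step_fn, state) in optimizers.items():
    final_loss[name] = float(loss(run(step_fn, state)))
    print(f"{name:9s} final loss = {final_loss[name]:.4f}")
```

The ranking this prints depends heavily on the chosen function and learning rates, which is the practical meaning of matching the method to the problem.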

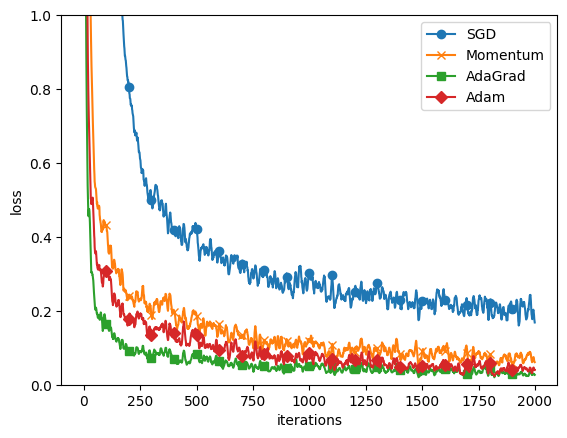

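Comparison by training progress

The figure shows the training progress when each optimization method is applied to MNIST, one of the best-known datasets.

On MNIST, AdaGrad appears to perform best by a slight margin.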
