
Data Science LAB

[Python] SVD (Singular Value Decomposition)

SVD Overview

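Like PCA, SVD is a matrix decomposition technique. However, the eigendecomposition behind PCA applies only to square matrices, whereas SVD can be applied to any matrix, including non-square matrices whose numbers of rows and columns differ.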

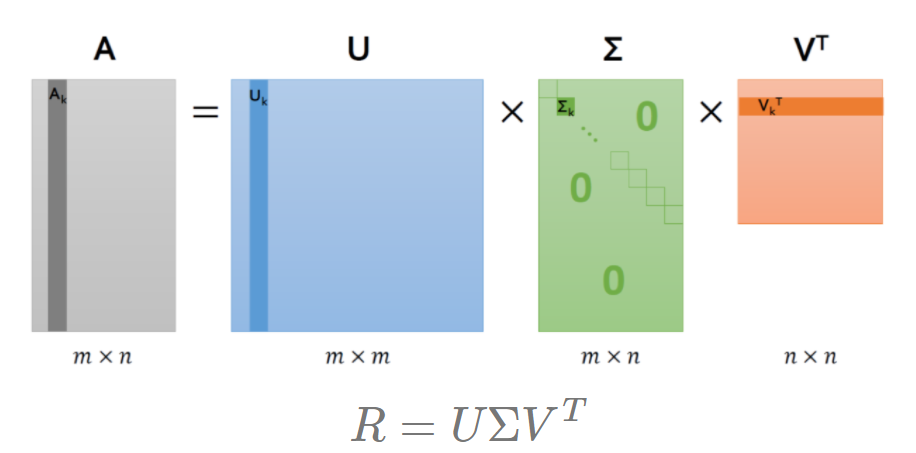

일반적으로, SVD는 m×n 크기의 행렬 A를 다음과 같이 분해하는 것을 의미한다.

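SVD stands for singular value decomposition. The column vectors of U and V are called singular vectors (left and right singular vectors, respectively), and the singular vectors within each matrix are mutually orthogonal.

- U: an m×m matrix whose inverse equals its transpose ($U^TU = I$)
- Σ: an m×n matrix whose off-diagonal entries are all 0; its diagonal entries are the singular values, which are non-negative and sorted in descending order
- V: an n×n matrix whose inverse equals its transpose ($V^TV = I$)

In other words, U and V are orthogonal matrices.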
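These properties can be checked numerically. The sketch below reuses the same seed and 4×4 random matrix as the example that follows, and verifies that $U^TU$ and $VV^T$ are identity matrices and that the singular values come back non-negative and in descending order.

```python
import numpy as np
from numpy.linalg import svd

np.random.seed(121)
a = np.random.randn(4, 4)

U, Sigma, Vt = svd(a)

# U and V are orthogonal: their transposes act as their inverses
print(np.allclose(U.T @ U, np.eye(4)))    # True
print(np.allclose(Vt @ Vt.T, np.eye(4)))  # True

# singular values are non-negative and sorted in descending order
print(np.all(Sigma >= 0), np.all(np.diff(Sigma) <= 0))  # True True
```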

Generating a random matrix

```python
import numpy as np
from numpy.linalg import svd

np.random.seed(121)
a = np.random.randn(4,4)
print(np.round(a,3))
```

A 4×4 random matrix is generated so that no row depends on any other row.

Computing U, Sigma, and Vt

```python
U,Sigma,Vt = svd(a)
print(U.shape,Sigma.shape,Vt.shape)
print("U : \n",np.round(U,3))
print("Sigma Value \n",np.round(Sigma,3))
print("V transpose matrix :\n",np.round(Vt,3))
```

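Passing the original matrix to svd() returns U, Sigma, and the transpose of V (Vt).

Because every off-diagonal entry of Σ (Sigma) is 0, NumPy returns only the diagonal entries (the singular values) as a 1-D array, which is why Sigma has shape (4,).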
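The 4×4 example hides how the shapes behave for a non-square matrix. As a sketch with a hypothetical 4×3 random matrix: with the default full_matrices=True, U is 4×4 and Vt is 3×3 while Sigma still holds only min(4, 3) = 3 values, so rebuilding A needs a 4×3 Σ padded with zeros. With full_matrices=False, U shrinks to 4×3 and np.diag(Sigma) fits directly.

```python
import numpy as np
from numpy.linalg import svd

np.random.seed(0)
b = np.random.randn(4, 3)  # hypothetical non-square matrix: 4 rows, 3 columns

# full_matrices=True (default): U is 4x4, Vt is 3x3, Sigma has 3 values
U, Sigma, Vt = svd(b)
print(U.shape, Sigma.shape, Vt.shape)  # (4, 4) (3,) (3, 3)

# rebuild the 4x3 Sigma matrix: singular values on the diagonal, zeros elsewhere
sigma_mat = np.zeros((4, 3))
sigma_mat[:Sigma.size, :Sigma.size] = np.diag(Sigma)
print(np.allclose(U @ sigma_mat @ Vt, b))  # True

# full_matrices=False: U is 4x3, so the 3x3 np.diag(Sigma) fits directly
U_e, Sigma_e, Vt_e = svd(b, full_matrices=False)
print(U_e.shape, Sigma_e.shape, Vt_e.shape)  # (4, 3) (3,) (3, 3)
print(np.allclose(U_e @ np.diag(Sigma_e) @ Vt_e, b))  # True
```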

Converting Sigma back into a 2-D diagonal matrix (zeros included)

```python
sigma_mat = np.diag(Sigma)
a_ = np.dot(np.dot(U,sigma_mat),Vt)
print(np.round(a_,3))
```

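Since Sigma holds only the diagonal values as a 1-D array, it must first be converted back into a diagonal matrix that includes the zeros (np.diag) before the matrix multiplication.

Multiplying U, Sigma, and Vt back together reproduces the original matrix exactly (up to rounding).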
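Comparing rounded printouts by eye works, but np.allclose gives a direct check. The sketch below also shows a NumPy broadcasting shortcut (not used in the original post): `U * Sigma` scales column j of U by `Sigma[j]`, which is the same as `U @ np.diag(Sigma)` without building the diagonal matrix.

```python
import numpy as np
from numpy.linalg import svd

np.random.seed(121)
a = np.random.randn(4, 4)
U, Sigma, Vt = svd(a)

# explicit version: turn the 1-D Sigma into a diagonal matrix first
a_explicit = U @ np.diag(Sigma) @ Vt

# broadcasting shortcut: multiply each column of U by its singular value
a_broadcast = (U * Sigma) @ Vt

print(np.allclose(a_explicit, a))   # True
print(np.allclose(a_broadcast, a))  # True
```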

When the data rows are dependent

```python
a[2] = a[0]+a[1]
a[3] = a[0]
print(np.round(a,3))
```

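To see how the Sigma values change when rows are dependent, and how that enables dimensionality reduction, the third row of a is replaced with (first row + second row) and the fourth row is set equal to the first row.

Running SVD again and checking the Sigma values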

```python
U,Sigma,Vt = svd(a)
print(U.shape,Sigma.shape,Vt.shape)
print("Sigma Value : \n",np.round(Sigma,3))
```

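After decomposing again, the shapes are the same as before, but two of the Sigma values have become 0 (numerically, tiny values that round to 0). This means the matrix has only two linearly independent row vectors, that is, its rank is 2.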
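"Zero" here really means "zero up to floating-point noise". A common way to count the nonzero singular values is to compare them against a tolerance; the sketch below uses the same tolerance formula that numpy.linalg.matrix_rank uses by default (largest singular value × max(m, n) × machine epsilon) and checks that both give rank 2.

```python
import numpy as np
from numpy.linalg import svd, matrix_rank

np.random.seed(121)
a = np.random.randn(4, 4)
a[2] = a[0] + a[1]  # row 3 depends on rows 1 and 2
a[3] = a[0]         # row 4 duplicates row 1

U, Sigma, Vt = svd(a)
print(Sigma)  # the last two entries are tiny, not necessarily exact zeros

# count singular values above a tolerance: this is the numerical rank
tol = Sigma.max() * max(a.shape) * np.finfo(a.dtype).eps
print(np.sum(Sigma > tol))  # 2
print(matrix_rank(a))       # 2
```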

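```python
# U is multiplied by Sigma, so keep only the first 2 columns of U (matching the first 2 singular values)
U_=U[:,:2]
Sigma_ = np.diag(Sigma[:2])
# keep only the first 2 rows of the V transpose matrix
Vt_ = Vt[:2]
print(U_.shape,Sigma_.shape,Vt_.shape)

# multiply U, Sigma, Vt to reconstruct the original matrix
a_ = np.dot(np.dot(U_,Sigma_),Vt_)
print(np.round(a_,3))
```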

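Multiplying the reduced factors back together reproduces the original matrix up to floating-point rounding. Because the two discarded singular values were (numerically) zero, truncating them loses no information.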
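A compact sketch of the same truncation that confirms the reconstruction numerically, assuming the same seed and row updates as above: keeping k = 2 components (the rank of a) gives a matrix that np.allclose reports as equal to the original.

```python
import numpy as np
from numpy.linalg import svd

np.random.seed(121)
a = np.random.randn(4, 4)
a[2] = a[0] + a[1]
a[3] = a[0]

U, Sigma, Vt = svd(a)
k = 2  # number of (numerically) nonzero singular values, i.e. the rank of a
a_k = U[:, :k] @ np.diag(Sigma[:k]) @ Vt[:k]

# dropping zero singular values loses nothing, so a_k matches a up to rounding
print(np.allclose(a_k, a))    # True
print(np.abs(a_k - a).max())  # a floating-point-level difference
```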

Truncated SVD

```python
import numpy as np
from scipy.sparse.linalg import svds
from scipy.linalg import svd

# print the original matrix and check the shapes of U, Sigma, Vt under full SVD
np.random.seed(121)
matrix = np.random.random((6,6))
print("Original matrix: \n",matrix)

U,Sigma,Vt = svd(matrix,full_matrices=False)
print("Decomposed matrix shapes: ",U.shape,Sigma.shape,Vt.shape)
print("Sigma values: \n",Sigma)

# Truncated SVD keeping only 4 singular values in Sigma
num_components = 4
U_tr,Sigma_tr, Vt_tr = svds(matrix,k=num_components)
print("Truncated SVD decomposed shapes: ",U_tr.shape,Sigma_tr.shape,Vt_tr.shape)
print("Truncated SVD Sigma values: ",Sigma_tr)

# reconstruct from the truncated factors
matrix_tr = np.dot(np.dot(U_tr,np.diag(Sigma_tr)),Vt_tr)
print("Matrix reconstructed after Truncated SVD: \n",matrix_tr)
```

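Decomposing the 6×6 matrix with full SVD gives U, Sigma, and Vt of shapes (6, 6), (6,), and (6, 6), whereas calling svds with k=4 gives shapes (6, 4), (4,), and (4, 6). Because the two smallest singular values are discarded, the reconstructed matrix is close to, but not exactly equal to, the original.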
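How large is the reconstruction error? A standard identity says that the Frobenius-norm error of a rank-k SVD reconstruction equals the square root of the sum of the squared discarded singular values; for k=4 that is $\sqrt{\sigma_5^2 + \sigma_6^2}$. The sketch below checks this for every k, slicing the top-k factors of scipy.linalg.svd by hand rather than calling svds (an assumption made so that the singular values are guaranteed to be in descending order).

```python
import numpy as np
from scipy.linalg import svd

np.random.seed(121)
matrix = np.random.random((6, 6))

U, Sigma, Vt = svd(matrix, full_matrices=False)  # Sigma is sorted descending

for k in range(1, 7):
    # keep only the k largest singular values and their singular vectors
    approx = U[:, :k] @ np.diag(Sigma[:k]) @ Vt[:k]
    err = np.linalg.norm(matrix - approx)          # Frobenius norm by default
    expected = np.sqrt(np.sum(Sigma[k:] ** 2))     # discarded singular values
    print(k, round(err, 4), round(expected, 4))
```

The two columns agree for every k, and the error shrinks to zero at k=6, where nothing is discarded.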

Transforming data with scikit-learn's TruncatedSVD class

```python
from sklearn.decomposition import TruncatedSVD,PCA
from sklearn.datasets import load_iris
import matplotlib.pyplot as plt
%matplotlib inline

iris = load_iris()
iris_ftrs = iris.data

# TruncatedSVD transform with 2 main components
tsvd = TruncatedSVD(n_components=2)
tsvd.fit(iris_ftrs)
iris_tsvd = tsvd.transform(iris_ftrs)

# 2-D scatter plot of the TruncatedSVD output, species distinguished by color
plt.scatter(x=iris_tsvd[:,0],y=iris_tsvd[:,1],c=iris.target)
plt.xlabel('TruncatedSVD Component 1')
plt.ylabel('TruncatedSVD Component 2')
```

The transformed components retain enough distinguishing information that the species form reasonably separable clusters.

Scaling the iris data, then transforming it with TruncatedSVD and PCA

```python
from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()
iris_scaled = scaler.fit_transform(iris_ftrs)

# TruncatedSVD transform on the scaled data
tsvd = TruncatedSVD(n_components = 2)
tsvd.fit(iris_scaled)
iris_tsvd = tsvd.transform(iris_scaled)

# PCA transform on the scaled data
pca = PCA(n_components=2)
pca.fit(iris_scaled)
iris_pca = pca.transform(iris_scaled)

# TruncatedSVD-transformed data on the left, PCA-transformed data on the right
fig,(ax1,ax2) = plt.subplots(figsize=(9,4), ncols=2)
ax1.scatter(x=iris_tsvd[:,0],y=iris_tsvd[:,1],c=iris.target)
ax2.scatter(x=iris_pca[:,0],y=iris_pca[:,1],c=iris.target)
ax1.set_title('Truncated SVD Transformed')
ax2.set_title('PCA Transformed')

print((iris_pca-iris_tsvd).mean())
print((pca.components_-tsvd.components_).mean())
```

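Both values are close to 0, suggesting that the two transforms are nearly identical. In other words, once scaling has centered the data, scikit-learn's TruncatedSVD and PCA perform essentially the same transformation. (PCA centers the data internally before decomposing it, whereas TruncatedSVD does not, so the two coincide only on data that is already centered.)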
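The mean of the differences is a fairly weak test: both outputs are centered (their column means are about 0), so their mean difference is near 0 even if individual points disagree, and the sign of each component is arbitrary. A stricter sketch compares the outputs element by element after taking absolute values; `random_state=0` is added here only for reproducibility and is not part of the original code.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA, TruncatedSVD
from sklearn.preprocessing import StandardScaler

iris_scaled = StandardScaler().fit_transform(load_iris().data)

iris_tsvd = TruncatedSVD(n_components=2, random_state=0).fit_transform(iris_scaled)
iris_pca = PCA(n_components=2).fit_transform(iris_scaled)

# compare point by point, ignoring the arbitrary sign of each component
print(np.abs(np.abs(iris_pca) - np.abs(iris_tsvd)).max())
print(np.allclose(np.abs(iris_pca), np.abs(iris_tsvd), atol=1e-6))
```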

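However, scikit-learn's PCA has traditionally required dense input, whereas TruncatedSVD can also transform sparse matrices, because it does not need to center the data first.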
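A minimal sketch of the sparse case, assuming a hypothetical 100×50 random matrix with about 5% nonzero entries, built with scipy.sparse.random in CSR format: TruncatedSVD accepts it directly, without converting it to a dense array first.

```python
from scipy import sparse
from sklearn.decomposition import TruncatedSVD

# hypothetical sparse input: 100 x 50, ~5% nonzero entries, stored as CSR
X_sparse = sparse.random(100, 50, density=0.05, format="csr", random_state=0)

tsvd = TruncatedSVD(n_components=5, random_state=0)
X_reduced = tsvd.fit_transform(X_sparse)  # the sparse matrix is passed as-is

print(X_sparse.nnz)      # number of stored nonzero entries
print(X_reduced.shape)   # (100, 5)
```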

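Like PCA, SVD is used in computer vision for pattern recognition through image compression, and in signal processing. It is also the underlying algorithm of LSA (Latent Semantic Analysis), a topic-modeling technique for text.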
